Supporting Reliable, Interoperable and Efficient AI Infrastructure at Scale

Data center architectures are rapidly evolving, with 2026 marking a pivotal inflection point toward AI-native networks. This shift is redefining workload characteristics, driven by distributed AI/ML training, large-scale inference, and high-performance computing (HPC) operating across the fabric. As these demands intensify, data center infrastructure must evolve in lockstep to deliver the scale, performance, and reliability required for AI-driven operations.

The scale that AI demands results in data center operators deploying millions of links for the scale up and scale out bandwidth. Each link becomes absolutely critical to the whole performance. If one link flaps, the whole workload stalls and the impact on AI tasks, power, utilization, and throughput is profound.

A new level of physical layer link reliability is required for such AI at scale. With millions of links being deployed, reliability and margin must meet new levels. Test and measurement must do more than analyze link flaps; it needs to indicate the margin in every element in the link to make sure each optical link operate with significant margin, as even one flap in a million can cause massive impact. Forensic insight into each link to validate design margin and stability is critical.

In the following blog, we examine how VIAVI test solutions enable reliability, scalability and interoperability as data centers evolve. This will also be a core focus at VIAVI’s booth 5B18 at MWC Barcelona 2026, where we will be showcasing our comprehensive L0–L7 solutions portfolio and highlighting how we have integrated our foundational physical layer expertise with high-speed Ethernet and network performance capabilities from the acquisition of Spirent’s High-Speed Ethernet, Network Testing and Channel Emulation (HSE / NS / CE) business.

By addressing the entire stack, we are enabling operators and hyperscalers to transition from component-level testing to holistic, AI-ready infrastructure validation.

The Interconnect Bottleneck

Data center compute has advanced significantly quicker than bandwidth, memory, and interconnects, which can be a key bottleneck in the data center. Meta presented research showing that for three of the talk’s four cited LLMs, compute can be sitting idle for over 35% of the time just waiting for data to transfer in and out of the chips. In one case, this figure was over 57% of idle time.

There is, therefore, considerable pressure to implement the fastest interconnect for both training and inference: 400G, 800G, 1.6T and soon 3.2T Ethernet and optical technologies. There is also pressure to ensure that these are working as efficiently as possible.

The shift to AI-native networks means traditional throughput tests are no longer sufficient to guarantee a network is handling AI workload and related dynamics. Testing for these architectures instead requires strategies to undertake fabric-aware validation methods that model the specific, high-pressure traffic patterns unique to AI.

Achieving predictable performance across racks, clusters, and sites requires a unified approach to validation that includes:

- Emulating Real Workloads

Firstly, validation techniques will need to be fabric aware. Achieving this requires the modeling of simply generic traffic patterns. Doing so ensures the network is capable of handling the all-reduce operations and east-west traffic flows in large-scale GPU synchronization.

To achieve this, the high-fidelity emulation of GPU workloads will be needed, which will include emulating Collective Communication Library and LLB traffic pattern on RoCEv2 transport. Taking this approach will allow an understanding of how the network handles the kind of traffic jams that are unique to these data centers, which occur when thousands of processors attempt to share computed data simultaneously.

- Validating Scalability

As data center architectures scale out, stress-testing is needed for both multi-rack leaf-spine and super-spine configurations. To address this requirement, validation will be needed for up to 100Tb in order to provide a realistic view of behavior under pressure. Key metrics here will include latency, packet loss, and throughput under load. Validation will seek to quantify the stability of large-scale AI clusters during peak utilization.

Testing should also account for failure and congestion scenarios, including congestion cascades caused by a single failure. This can be achieved through the emulation of high-density port configurations and scaling scenarios.

- Ensuring Interoperability

The rapid migration to 800G and 1.6T also creates challenges in terms of interoperability across these multivendor environments. Automated testing platforms will therefore be needed to troubleshoot high-speed interconnects across optical, electrical, and Ethernet layers simultaneously. Unified orchestration of Layer 0–2 workflows is essential to enable scalability as architectures evolve.

It will therefore be vital to undertake a detailed analysis of the physical layer (including PAM4 and FEC performance) when verifying auto-negotiation and link training (AN/LT) behavior.

- Securing the Inference Layer

As AI moves to the edge, it becomes inference-heavy and distributed. This creates more vulnerabilities and performance bottlenecks that are more difficult to verify and secure.

To mitigate this, teams must emulate real clients/users to generate high-fidelity AI inference workloads that simulate dynamic prompts, multi-turn conversations, and varying context lengths. This will enable teams to characterize and benchmark inference clusters with key metrics such as time to first token (TTFT), concurrency behavior, and response accuracy.

The inference layer must be hardened against emerging threats such as prompt injection and API layer abuse. Stress-testing these systems involves simulating adversarial prompts and high-rate abuse scenarios to validate that AI safety and security controls are robust.

VIAVI at MWC 2026: The AI Data Center Zone

At MWC Barcelona 2026, VIAVI will showcase our latest offerings for AI data centers at Hall 5, booth 5B18. We will demonstrate how we enable operators, hyperscalers, neoclouds, and enterprises to scale infrastructure for intelligence.

Key demonstrations include:

AI Datacenter Interconnect Testing:

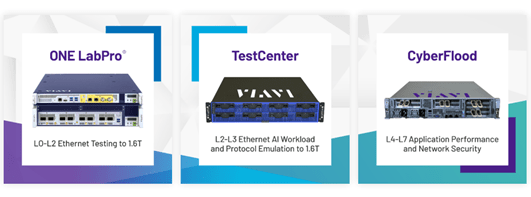

See how to accelerate the validation of 1.6T/800G/400G links using the VIAVI ONE LabPro. This demonstration will focus on physical layer performance, PAM4, and FEC performance with nanosecond-level precision.

Scale Up and Scale Out Network Validation:

An emulation of super-spine architectures using the VIAVI TestCenter to create real AI traffic patterns for RoCEv2 and CCL. This includes the measurement of congestion behavior and throughput under massive loads to ensure AI clusters deliver predictable performance.

AI Inference Testing:

See how to stress-test inference systems end-to-end and capture TTFT and latency data using VIAVI CyberFlood. Through this demonstration, we will highlight how to simulate adversarial prompts to ensure AI-driven services are robust and production-ready.

In addition to solutions for AI data centers, VIAVI will also showcase technologies for secure and quantum-safe architectures, mission-critical communications, autonomous operations, 6G, and AI-RAN. We look forward to seeing you there!